Seven teams are competing in the Ultimate Coder Challenge, building showcase applications for the Lenovo Yoga 13 that demonstrate Intel’s Perceptual Computing hardware.

I’m involved in judging the Ultimate Coder event, and every week you’ll find an update from me as I analyze the teams’ progress. This is week 4, and this week there are some great videos, interesting artwork, honesty and a ton of coding to enjoy. Some teams, however, have yet to incorporate any gesture control into their apps. Do they have time?

You can find all our Ultimate Coder posts here

Our Ultrabook software developer resources are here

All our Perceptual Computing posts are here

Peter O’Hanlon


Peter is the guy to follow if you’re into coding for Windows Presentation Foundation. We teased him in last week’s video, but here’s a hat-tip to you, Peter, because you’re providing really valuable information for other coders out there. As a reminder, Peter is creating Huda, a photo editing app. In his very honest post this week he admits to instability, frustration with the state of his UI and concerns about speed, but he also discusses solutions. He also reveals how he’s going to package the original photo and the filters so that they are ‘playable.’ Effectively, it’s a database of ordered filter edits that are applied every time the original photo is opened.
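To make the ‘playable’ idea concrete, here is a minimal Python sketch of the concept as described above. It is not Peter’s WPF code: the filter names, the JSON storage format and the `EditHistory` class are all hypothetical, and a ‘photo’ is simplified to a small grid of grayscale values. The original image is never modified; only an ordered list of edits is saved, and those edits are replayed whenever the photo is reopened.

```python
import json
from typing import Callable

# Hypothetical stand-in for a photo: rows of grayscale values (0-255).
Image = list[list[int]]


def brightness(img: Image, amount: int) -> Image:
    # Shift every pixel by `amount`, clamped to the 0-255 range
    return [[max(0, min(255, p + amount)) for p in row] for row in img]


def invert(img: Image) -> Image:
    # Photographic negative
    return [[255 - p for p in row] for row in img]


def threshold(img: Image, level: int) -> Image:
    # Pure black/white split at `level`
    return [[255 if p >= level else 0 for p in row] for row in img]


# Hypothetical filter registry: stored edits refer to filters by name
FILTERS: dict[str, Callable[..., Image]] = {
    "brightness": brightness,
    "invert": invert,
    "threshold": threshold,
}


class EditHistory:
    """Ordered filter edits kept separately from the untouched original."""

    def __init__(self) -> None:
        self.edits: list[dict] = []

    def add(self, name: str, **params) -> None:
        if name not in FILTERS:
            raise KeyError(f"unknown filter: {name}")
        self.edits.append({"filter": name, "params": params})

    def to_json(self) -> str:
        # This string is what would be saved next to the original photo
        return json.dumps(self.edits)

    @classmethod
    def from_json(cls, text: str) -> "EditHistory":
        history = cls()
        history.edits = json.loads(text)
        return history

    def replay(self, original: Image) -> Image:
        # Re-apply every edit in order; each filter returns a new image,
        # so the original is never modified
        result = original
        for edit in self.edits:
            result = FILTERS[edit["filter"]](result, **edit["params"])
        return result


# Usage: record two edits, save them, then "reopen" the photo
original = [[10, 200], [128, 60]]
history = EditHistory()
history.add("brightness", amount=50)
history.add("invert")
saved = history.to_json()
reopened = EditHistory.from_json(saved).replay(original)
print(reopened)  # [[195, 5], [77, 145]]
```

Storing edits as an ordered list means any edit can be undone simply by dropping it and replaying. The trade-off is that every filter is re-run each time the photo is opened, which ties in with Peter’s own concerns about speed.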

Thought: Cycling through a lot of filters using gestures could take a lot of time. Gestures need to add value for the user. I understand that they could be used in lie-back mode, but what about speed, efficiency and creativity? Creativity is one of the key features of your app, and in my experience, creative people don’t want barriers.

Peter’s full update, including code samples, is here.

Infrared 5

These guys had a game concept and artwork that were easy to get excited about in the early stages of the competition, and that’s a lesson for anyone developing in a social and open way like this: kickstart your project with some good teasers! Catapult Revenge is coming along, and there’s a playable demo available that is more responsive than I had expected. I couldn’t get my Android controller to steer the flight, though, and as I don’t have the Perceptual Computing hardware here yet (it’s coming soon!) I can’t test any gesture input.

For developers looking at the Perceptual Computing SDK for the first time, read the first few paragraphs of the Infrared 5 update this week to learn about some of its limits, then think about how you could break those limits, as Infrared 5 is planning to. They’re using OpenCV to, hopefully, take things a step further. You can read the full update, with code samples, here.

Lee Bamber


Lee provided us with an inspiring and exciting video last week, and this week he does the same by placing himself inside a virtual conference call. Lee takes the time to answer my question about how he is recreating the image on the receiving end: he’s literally taking a 320×240 grid of Z-axis (depth) data from the camera and sending that, along with the original color information, over the network. One assumes this data could be compressed in an MPEG-like fashion with an extra Z-layer attached. Is there a standard for true (not stereoscopic) 3D video transfer yet? Read Lee’s post and watch the great videos here.
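As a back-of-the-envelope illustration of why compression matters here: a 320×240 grid is 76,800 samples per frame. The Python sketch below is hypothetical, not Lee’s code, and it assumes (this isn’t stated in his post) 16 bits of depth plus 8 bits each of red, green and blue per pixel. It interleaves depth and color into one payload, unpacks it again as the receiving end would, and runs generic zlib compression over a synthetic frame.

```python
import struct
import zlib

WIDTH, HEIGHT = 320, 240  # depth-grid resolution mentioned in Lee's post

# Assumed per-pixel layout (hypothetical): 16-bit depth, then 8-bit R, G, B.
# "<" = little-endian with no padding, so each pixel is exactly 5 bytes.
PIXEL_FORMAT = "<HBBB"
PIXEL_SIZE = struct.calcsize(PIXEL_FORMAT)


def pack_frame(depth: list[int], color: list[tuple[int, int, int]]) -> bytes:
    """Interleave depth and color samples into one network payload."""
    if len(depth) != WIDTH * HEIGHT or len(color) != WIDTH * HEIGHT:
        raise ValueError("frame must contain exactly WIDTH * HEIGHT pixels")
    out = bytearray()
    for z, (r, g, b) in zip(depth, color):
        out += struct.pack(PIXEL_FORMAT, z, r, g, b)
    return bytes(out)


def unpack_frame(data: bytes) -> tuple[list[int], list[tuple[int, int, int]]]:
    """Rebuild the depth grid and colors on the receiving end."""
    depth, color = [], []
    for z, r, g, b in struct.iter_unpack(PIXEL_FORMAT, data):
        depth.append(z)
        color.append((r, g, b))
    return depth, color


# Synthetic frame: flat background with a nearer square "head" in the middle
depth = [1000] * (WIDTH * HEIGHT)
color = [(40, 40, 40)] * (WIDTH * HEIGHT)
for y in range(80, 160):
    for x in range(120, 200):
        depth[y * WIDTH + x] = 600
        color[y * WIDTH + x] = (200, 160, 140)

raw = pack_frame(depth, color)
compressed = zlib.compress(raw)  # generic lossless compression, not a video codec

print(len(raw))                 # 384000 bytes per frame
print(len(raw) * 30 * 8 / 1e6)  # 92.16 Mbit/s at an assumed 30 fps
print(len(compressed))          # far smaller for this highly redundant frame
```

At 5 bytes per pixel that works out to 384,000 bytes per frame, or roughly 92 Mbit/s uncompressed at an assumed 30 frames per second. zlib only squeezes out redundancy losslessly; an MPEG-like scheme with a Z-layer would trade some accuracy for much smaller frames.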

Eskil Steenberg

Want to learn about API design in C? Eskil has you covered in his week 4 update. Buttons, sliders and triggers are discussed in depth, but what does this all have to do with Perceptual Computing? Eskil is working towards implementing it on top of his Betray UI toolkit, but for the time being he’s deep in the details of the toolkit itself. We’ve mentioned before that submissions for the Ultimate Coder Challenge need to be good showcase applications for Perceptual Computing, so let’s hope Eskil has enough time. Check out his code samples in the post here.

The Code Monkeys


‘User laziness’ on laptop touchscreens is something I’ve discussed with many people over the last year. Most people who haven’t tried it don’t really get it. Yes, there are times when using the touchscreen is counter-productive, but there are also lazy-style inputs that are quicker than a touchpad, and then there are touch scenarios that aren’t easy but bring productive or enjoyable advantages. [My article and video on the subject are here.] When I’m working I use a combination, but always interspersed with changes in posture or method. In a gaming scenario that change in posture or method might not come for many minutes (or hours, if you’re good), and that’s the issue the Code Monkeys talk about this week. For a game to work with gesture control, it needs to give the user the feeling that the effort is worth it. Check out the Code Monkeys post and video here to find out how they’re tackling the ‘4 minutes of Minority Report’ issue.

Sixense

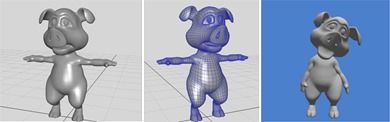

Sixense have an excellent post this week, with two videos that any layperson can understand: an on-screen puppet controlled by hand gestures. It’s great progress from Sixense, with a lot of artwork and a pig who reminds me of Piggley Winks! Question to Sixense: are we seeing real-time response while running on an Ultrabook in your video?

The full Sixense week 4 update, with code and more, is here.

Simian Squared

These guys, like Sixense, are putting a lot of time into quality artwork, something the single-person teams just won’t have the resources to do. Artwork is important for a showcase product, but the judges will take this manpower difference into account, although I admit it’s difficult not to get excited when you see nice renderings. I was hoping for a video this week because I find this project fascinating, and the most challenging of all the projects in the competition. It’s a simulator that will have to work in real time, in 3D, with constant gesture input to a spinning, flexible object. I can’t imagine the amount of data being crunched in real time by both the Perceptual Computing hardware and the software that’s using it. Simian Squared promise a video next week and a playable demo by GDC (in two weeks). Read their update and view the artwork here.

Summary

The seven teams appear to be in completely different places in terms of priorities, so I hope it all starts coming together soon. Feature freeze has to come next week, which then gives the teams three weeks (including the GDC break week) to get everything polished. The judges will be getting the hardware soon too, so watch out for some fun posts around that.

Important links for the Intel Ultimate Coder Challenge: Going Perceptual Competition

- Intel competition Website

- Join everyone on Facebook

- Competitors list and backgrounder

- Twitter stream #ultimatecoder

- All our perceptual computing coverage can be found here

- All posts about this and the previous software competition can be found here

Full disclosure: we are being compensated for content related to the Ultimate Coder and to attend an Intel event. We have committed to posting at least once per week on this subject and to judging the final applications. All posts are 100% written and edited by Ultrabooknews.